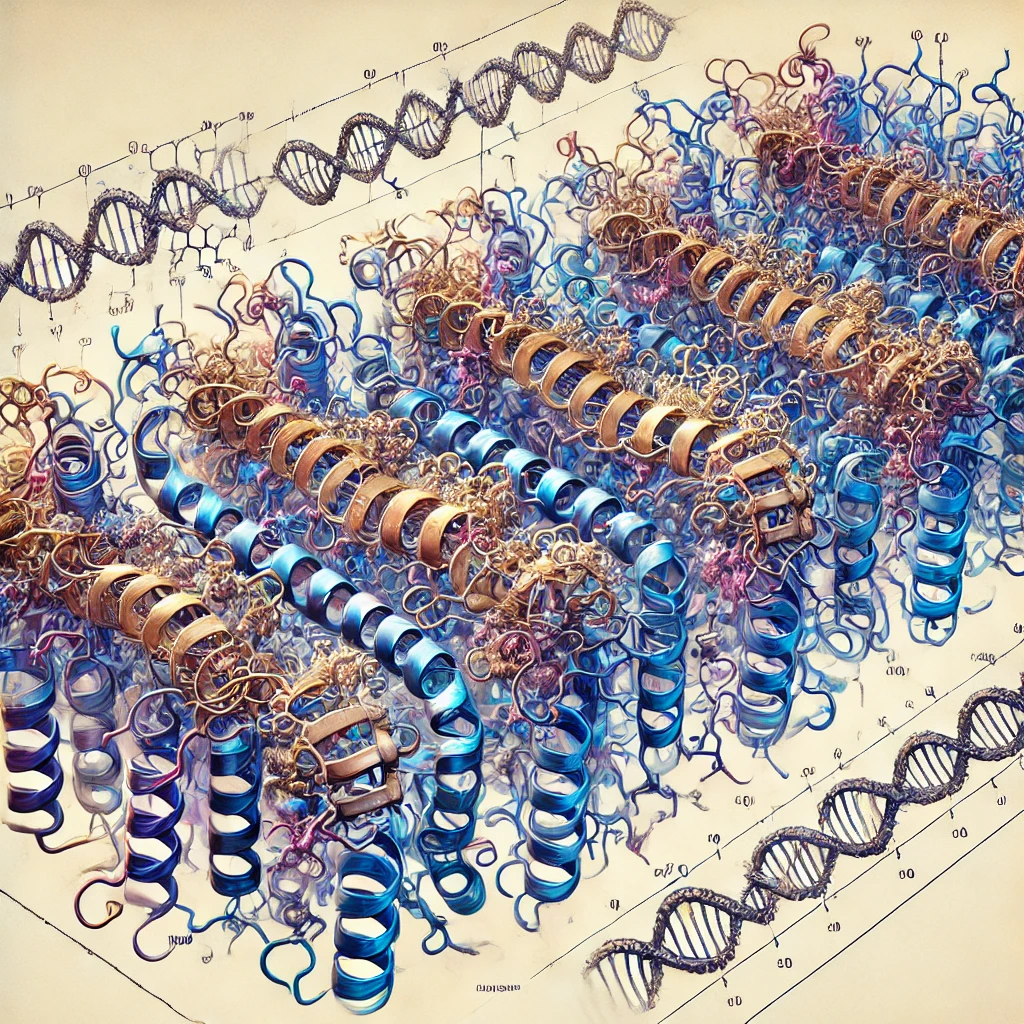

Think about this: There are more ways for a typical protein to fold than there are atoms in the observable universe. By a lot. Not twice as many: for a small 100-amino-acid protein, we’re comparing roughly 10^80 atoms in the universe to approximately 10^300 possible configurations. For a single protein.
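To see where an estimate of this size can come from, here is a back-of-the-envelope sketch in Python. The inputs are assumptions chosen for illustration, not measured values: about 1,000 conformations per residue (the figure implied by 10^300 for 100 residues) and a hypothetical sampling rate of 10^13 conformations per second. Everything is done in log10 so the numbers stay readable.

```python
import math

def log10_configurations(n_residues: int, states_per_residue: float) -> float:
    """log10 of the number of conformations if residues varied independently."""
    return n_residues * math.log10(states_per_residue)

def log10_search_seconds(log10_configs: float, samples_per_second: float) -> float:
    """log10 of the seconds needed to visit every conformation exactly once."""
    return log10_configs - math.log10(samples_per_second)

# Illustrative assumptions, not measurements.
N_RESIDUES = 100            # a small protein
STATES_PER_RESIDUE = 1000   # assumed conformations per residue
SAMPLES_PER_SECOND = 1e13   # assumed (very generous) sampling rate

LOG10_ATOMS_IN_UNIVERSE = 80                  # rough, commonly quoted estimate
LOG10_AGE_OF_UNIVERSE_S = math.log10(4.3e17)  # about 13.8 billion years in seconds

log10_configs = log10_configurations(N_RESIDUES, STATES_PER_RESIDUE)
log10_seconds = log10_search_seconds(log10_configs, SAMPLES_PER_SECOND)

print(f"configurations          ~ 10^{log10_configs:.0f}")
print(f"configurations per atom ~ 10^{log10_configs - LOG10_ATOMS_IN_UNIVERSE:.0f}")
print(f"exhaustive search time  ~ 10^{log10_seconds:.0f} s")
print(f"in ages of the universe ~ 10^{log10_seconds - LOG10_AGE_OF_UNIVERSE_S:.0f}")
```

Under these assumptions a brute-force search would take vastly longer than the age of the universe, which is why a protein cannot be finding its fold by trying every option. Changing the assumed states per residue shifts the exponent, but not the conclusion.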

Yet somehow, proteins in your body are folding correctly, right now, in microseconds to milliseconds. Even more bizarrely: in 2022, DeepMind’s AlphaFold system essentially solved this seemingly impossible problem, releasing predicted structures for nearly all catalogued proteins known to science, over 200 million of them. But here’s the catch: we can’t really explain how it does it.

Let me explain why this is both amazing and concerning:

- The Scale of the Problem

o A typical protein has hundreds of amino acids

o Each amino acid can adopt multiple conformations based on its rotatable bonds

o For even a small protein, the number of possible configurations is astronomical

o Yet natural proteins find their correct fold reliably and quickly. This is Levinthal’s paradox

- What Makes It Hard

o Real proteins fold in microseconds to milliseconds

o They have to somehow search an impossibly large configuration space

o They find the same final structure repeatedly and reliably

o This efficiency remained mysterious for decades

- The AI Solution

o AlphaFold achieves atomic-level accuracy for many structures

o Its training database includes hundreds of thousands of known protein structures

o It dramatically outperforms previous computational methods

o But its decision-making process is distributed across millions of parameters

- The Information Paradox

o We engineered the system that solved the problem

o We understand its training data and architecture

o We can verify its predictions experimentally

o But we cannot explain its internal decision process

o The solution exists in a mathematical space humans cannot visualize

This illustrates a profound shift happening in science. We’re entering an era where our most powerful scientific tools produce answers we can verify through processes we cannot fully comprehend.

This raises some fascinating questions:

• If we can’t understand how a solution works, do we truly understand the problem?

• As AI tackles more complex problems, will this become the norm?

• What are the implications for scientific understanding when our tools surpass our ability to comprehend them?

I find this especially intriguing as a physicist — it suggests we’re encountering fundamental limits not just in computation, but in human comprehension itself.